LyriFont

Interactive visual generation from song lyrics.

- Academic Year: 2023-24

- Students

Noemi Mauri

Matteo Gionfriddo

Riccardo Passoni

Alessandro Ferrando

- Source code: GitHub

Description

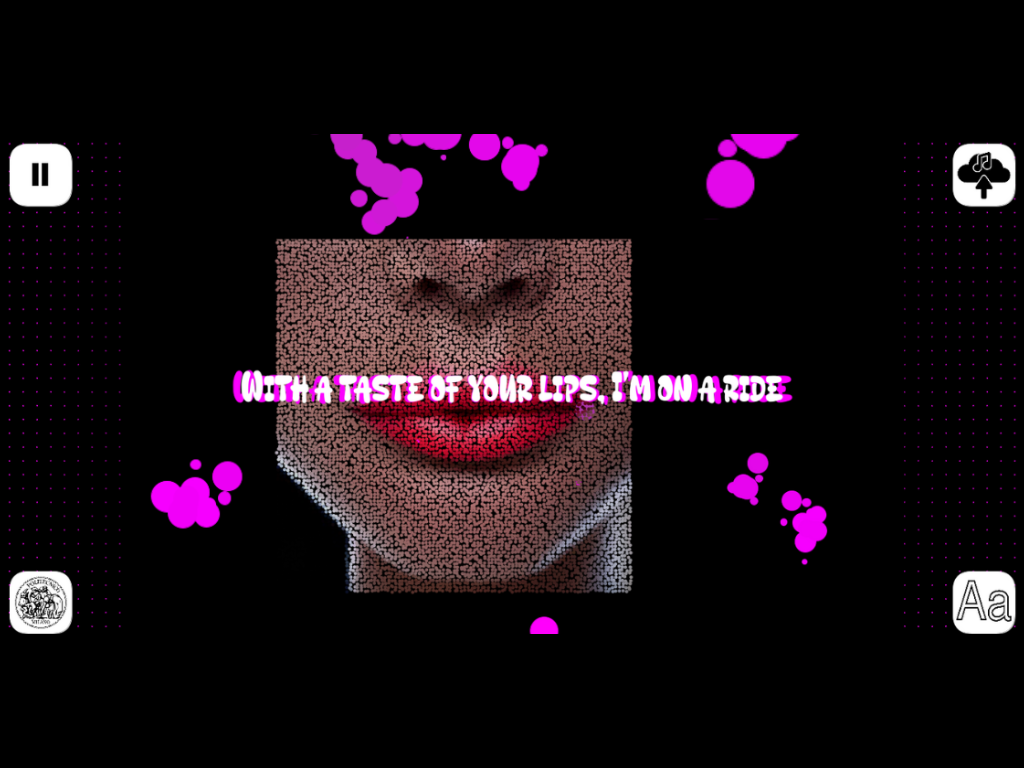

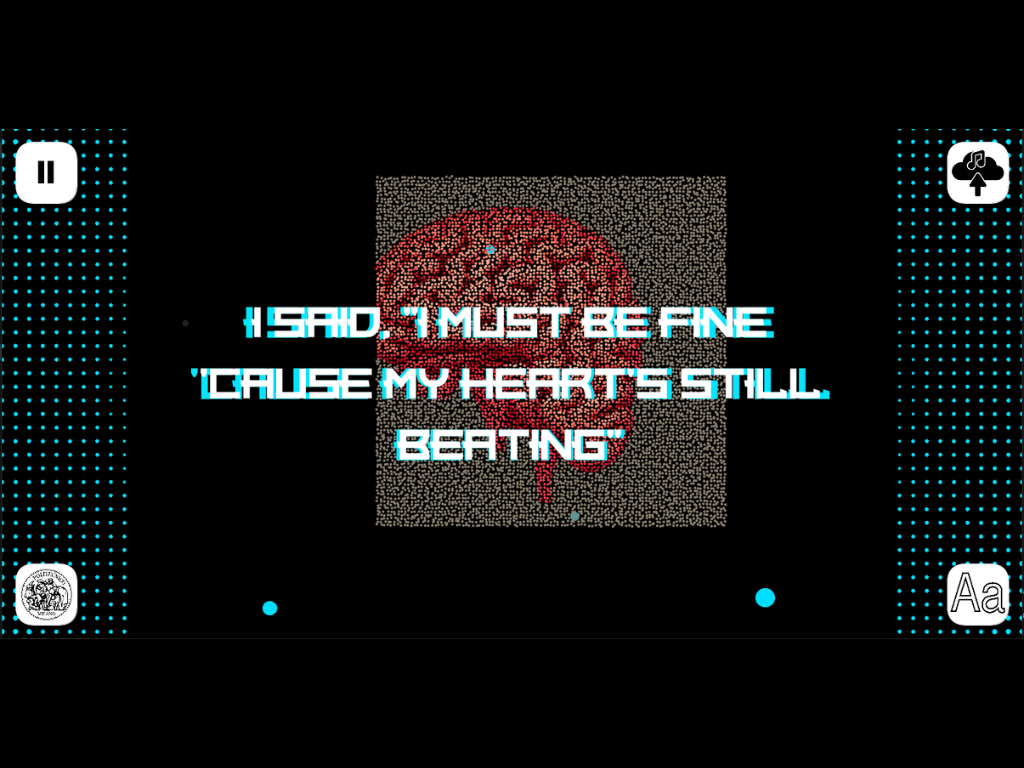

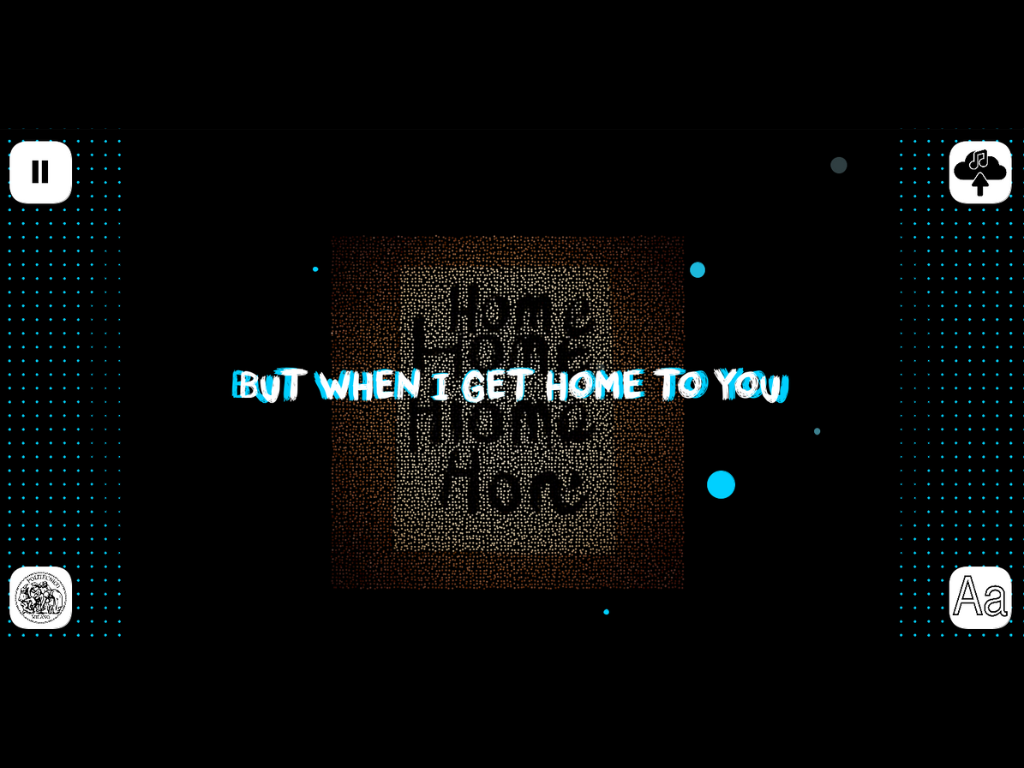

LyriFont aims to bridge music and the visual arts by combining neural networks, AI image generation, and generative visual effects. Its primary objective is to convey the distinctive mood and cultural essence of different music genres through visually representative typography, deepening the user’s connection to the music and lyrics and making the experience more immersive and engaging. LyriFont is conceived as a potential feature for popular music streaming platforms such as Spotify or Apple Music, but its versatility also makes it suitable for other applications, such as interactive installations or visual displays at live concerts. LyriFont uses neural networks to determine the music genre of an input song and then associates it with the font that best matches the identified genre. It also generates an image based on the song’s lyrics, which a particle system then modifies dynamically to create an engaging, interactive visual experience.

Challenges, accomplishments and lessons learned

The project presented several challenges, largely because of its novelty: with no existing literature on the connection between fonts and music genres, we had no established path to follow. Implementing neural networks for genre recognition posed another significant challenge, as we explored various options for feature selection and network architecture to find the most reliable solution. Despite these challenges, our collective effort led to several accomplishments. We developed a functional system that matches a font to the music genre of a song and uses it to display the song’s lyrics, reflecting our dedication and problem-solving skills. Working as a team allowed us to pool our diverse skills and perspectives, which helped us find creative solutions. Effective communication among team members played a crucial role in overcoming obstacles and refining our ideas.

Technology

The two main technologies used in our project were Python and Processing, which communicate via the OSC (Open Sound Control) protocol. In this setup, Python acts as the server, handling music genre classification, while Processing serves as the client, managing the visual display.
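To make the Python-to-Processing link more concrete, here is a minimal sketch of how an OSC message is laid out on the wire and sent as a UDP datagram, using only the Python standard library. The address patterns, the font file name, and port 12000 are hypothetical placeholders rather than the project's actual values; in practice, libraries such as python-osc (Python) and oscP5 (Processing) usually handle this encoding.

```python
import socket
import struct


def _osc_string(value: str) -> bytes:
    """Encode an OSC string: UTF-8 bytes, a terminating NUL, then NUL padding to a multiple of 4."""
    data = value.encode("utf-8") + b"\x00"
    padding = (4 - len(data) % 4) % 4
    return data + b"\x00" * padding


def encode_osc_message(address: str, *args) -> bytes:
    """Build an OSC 1.0 message supporting int32 ('i'), float32 ('f') and string ('s') arguments."""
    type_tags = ","
    payload = b""
    for arg in args:
        if isinstance(arg, bool):
            # bool is a subclass of int in Python; OSC booleans use other tags, so reject them here
            raise TypeError("booleans are not handled in this sketch")
        if isinstance(arg, int):
            type_tags += "i"
            payload += struct.pack(">i", arg)  # big-endian 32-bit integer
        elif isinstance(arg, float):
            type_tags += "f"
            payload += struct.pack(">f", arg)  # big-endian 32-bit float
        elif isinstance(arg, str):
            type_tags += "s"
            payload += _osc_string(arg)
        else:
            raise TypeError(f"unsupported OSC argument type: {type(arg).__name__}")
    # Address pattern, then type tag string, then the packed arguments
    return _osc_string(address) + _osc_string(type_tags) + payload


def send_osc(address: str, *args, host: str = "127.0.0.1", port: int = 12000) -> None:
    """Send one OSC message as a single UDP datagram (port 12000 is a hypothetical choice)."""
    packet = encode_osc_message(address, *args)
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(packet, (host, port))
```

For example, `send_osc("/lyrifont/font", "SomeFont.ttf")` sends a single datagram that a Processing sketch listening on the same port can decode. Because UDP does not guarantee delivery, a real setup may need to tolerate occasional dropped messages.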

In Python, we implemented music genre classification using LSTM (Long Short-Term Memory) neural networks. This involved extracting and processing the musical features used to train the model, which we built and refined with the TensorFlow library. Additionally, we used syncedlyrics to retrieve song lyrics, spaCy for keyword extraction, and the Hugging Face API to generate images from these keywords.
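The classifier itself was built with TensorFlow, where a layer of this kind is typically declared with `tf.keras.layers.LSTM`. To show what such a layer computes, here is a minimal, dependency-free sketch of one LSTM layer reading a sequence of per-frame feature vectors, followed by a dense softmax output over genres. The weights are random and untrained, and the genre list, feature size, and hidden size are hypothetical; this illustrates the forward pass only, not the project's trained model or feature set.

```python
import math
import random

# Hypothetical label set; the project's actual genre list may differ.
GENRES = ["rock", "pop", "jazz", "hip-hop"]


def sigmoid(x: float) -> float:
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def matvec(matrix, vector):
    """Multiply a matrix (list of rows) by a vector."""
    return [sum(w * v for w, v in zip(row, vector)) for row in matrix]


def random_matrix(rows, cols, rng):
    return [[rng.uniform(-0.1, 0.1) for _ in range(cols)] for _ in range(rows)]


class LSTMLayer:
    """One LSTM layer; every gate sees the concatenation [x_t, h_(t-1)]."""

    def __init__(self, input_size, hidden_size, seed=0):
        rng = random.Random(seed)
        self.hidden_size = hidden_size
        # i = input gate, f = forget gate, o = output gate, g = candidate cell state
        self.weights = {gate: random_matrix(hidden_size, input_size + hidden_size, rng) for gate in "ifog"}
        self.biases = {gate: [0.0] * hidden_size for gate in "ifog"}
        self.biases["f"] = [1.0] * hidden_size  # common trick: start with the forget gate mostly open

    def _gate(self, gate, z, activation):
        pre = matvec(self.weights[gate], z)
        return [activation(p + b) for p, b in zip(pre, self.biases[gate])]

    def step(self, x, h, c):
        z = list(x) + list(h)
        i = self._gate("i", z, sigmoid)
        f = self._gate("f", z, sigmoid)
        o = self._gate("o", z, sigmoid)
        g = self._gate("g", z, math.tanh)
        # New cell state: keep part of the old memory, add part of the candidate
        c_next = [fv * cv + iv * gv for fv, cv, iv, gv in zip(f, c, i, g)]
        h_next = [ov * math.tanh(cv) for ov, cv in zip(o, c_next)]
        return h_next, c_next

    def run(self, sequence):
        """Consume the whole sequence and return the final hidden state."""
        h = [0.0] * self.hidden_size
        c = [0.0] * self.hidden_size
        for x in sequence:
            h, c = self.step(x, h, c)
        return h


def softmax(logits):
    m = max(logits)
    exps = [math.exp(v - m) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def classify(sequence, layer, out_weights, out_bias):
    """Dense + softmax on the last hidden state; returns the top genre and all probabilities."""
    h = layer.run(sequence)
    logits = [s + b for s, b in zip(matvec(out_weights, h), out_bias)]
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    return GENRES[best], probs
```

In Keras the same model would be an `LSTM` layer followed by a `Dense` layer with softmax activation; writing the gates out by hand only serves to make the computation visible.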
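The lyrics-to-image step can be sketched as follows, assuming the lyrics arrive as LRC text (the synchronized format that syncedlyrics retrieves, where each line is prefixed by a `[mm:ss.xx]` timestamp). The project used spaCy for keyword extraction; to keep this example dependency-free, it substitutes a much simpler frequency count over non-stopwords, so it only illustrates the data flow. The stopword list, the `top_k` value, and the prompt template are hypothetical, and the resulting string stands for what would be sent to an image-generation model through the Hugging Face API.

```python
import re
from collections import Counter

# One timestamp at the start of an LRC line; lines with several timestamps are not expanded here.
LRC_LINE = re.compile(r"^\[(\d+):(\d{2}(?:\.\d+)?)\](.*)$")

# Tiny, hypothetical stopword list; spaCy ships a much larger one.
STOPWORDS = {
    "the", "and", "you", "your", "are", "was", "for", "with",
    "that", "this", "but", "not", "all", "just", "from", "have", "into",
}


def parse_lrc(lrc_text):
    """Return (seconds, text) pairs for every timestamped, non-empty lyric line."""
    lines = []
    for raw in lrc_text.splitlines():
        match = LRC_LINE.match(raw.strip())
        if match:  # metadata tags such as [ar:...] do not match and are skipped
            minutes, seconds, text = match.groups()
            text = text.strip()
            if text:
                lines.append((int(minutes) * 60 + float(seconds), text))
    return lines


def extract_keywords(lines, top_k=5):
    """Rank content words by frequency (a simple stand-in for spaCy-based extraction)."""
    words = []
    for _, text in lines:
        for word in re.findall(r"[a-z']+", text.lower()):
            word = word.strip("'")
            if len(word) > 2 and word not in STOPWORDS:
                words.append(word)
    # Ties keep the order in which words were first encountered
    return [word for word, _ in Counter(words).most_common(top_k)]


def build_prompt(keywords):
    """Join keywords into a text-to-image prompt (the template is hypothetical)."""
    return "An illustration evoking " + ", ".join(keywords)
```

Keeping the timestamps alongside the text also preserves enough information to display each line when it is sung.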

Processing receives OSC messages from Python containing the fonts and lyrics, and renders them with dynamic visual effects. These include blobs generated by genetic algorithms that are influenced by user interactions and by the music features. This setup produces an engaging, interactive visual representation of the lyrics that reflects the song’s genre.
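As an illustration of how a genetic algorithm can shape a blob, here is a minimal sketch in which each genome is a list of radii around a closed outline. The fitness function is hypothetical: it rewards outlines whose spikiness matches a target derived from a music feature (an "energy" value in [0, 1]) and whose average radius grows as the user's cursor gets closer. The project implements its effects in Processing; Python is used here only to keep the examples in one language, and this is not the project's actual encoding or fitness function.

```python
import math
import random

N_POINTS = 16               # control points around the blob outline
MIN_R, MAX_R = 20.0, 120.0  # allowed radius range (hypothetical units: pixels)


def spikiness(genome):
    """Mean absolute difference between neighbouring radii, normalised by the radius range."""
    diffs = [abs(genome[k] - genome[(k + 1) % len(genome)]) for k in range(len(genome))]
    return sum(diffs) / len(diffs) / (MAX_R - MIN_R)


def fitness(genome, energy, cursor_distance):
    """Hypothetical fitness: match spikiness to energy and size to cursor proximity."""
    target_spike = energy * 0.5
    target_radius = MAX_R - min(cursor_distance, 400.0) / 400.0 * (MAX_R - MIN_R)
    mean_radius = sum(genome) / len(genome)
    spike_error = abs(spikiness(genome) - target_spike)
    radius_error = abs(mean_radius - target_radius) / (MAX_R - MIN_R)
    return -(spike_error + radius_error)  # higher is better; 0 is a perfect match


def random_genome(rng):
    return [rng.uniform(MIN_R, MAX_R) for _ in range(N_POINTS)]


def tournament(population, scores, rng, k=3):
    """Pick the fittest of k randomly sampled individuals."""
    picks = rng.sample(range(len(population)), k)
    return population[max(picks, key=lambda idx: scores[idx])]


def crossover(a, b, rng):
    """One-point crossover between two parent outlines."""
    cut = rng.randrange(1, N_POINTS)
    return a[:cut] + b[cut:]


def mutate(genome, rng, rate=0.1, sigma=8.0):
    """Gaussian mutation on a fraction of the radii, clamped to the allowed range."""
    return [
        min(MAX_R, max(MIN_R, r + rng.gauss(0.0, sigma))) if rng.random() < rate else r
        for r in genome
    ]


def evolve(energy, cursor_distance, generations=60, pop_size=30, seed=0):
    """Run the GA; return the best outline and the best score of each generation."""
    rng = random.Random(seed)
    population = [random_genome(rng) for _ in range(pop_size)]
    history = []
    for _ in range(generations):
        scores = [fitness(g, energy, cursor_distance) for g in population]
        best_idx = max(range(pop_size), key=lambda idx: scores[idx])
        history.append(scores[best_idx])
        next_gen = [population[best_idx]]  # elitism: carry the current best over unchanged
        while len(next_gen) < pop_size:
            parent_a = tournament(population, scores, rng)
            parent_b = tournament(population, scores, rng)
            next_gen.append(mutate(crossover(parent_a, parent_b, rng), rng))
        population = next_gen
    scores = [fitness(g, energy, cursor_distance) for g in population]
    best_idx = max(range(pop_size), key=lambda idx: scores[idx])
    return population[best_idx], history


def outline_points(genome, cx, cy):
    """Convert radii to (x, y) vertices around a centre, e.g. for drawing a closed shape."""
    step = 2 * math.pi / len(genome)
    return [(cx + r * math.cos(k * step), cy + r * math.sin(k * step)) for k, r in enumerate(genome)]
```

Elitism guarantees that the best score never decreases between generations, which keeps the blob from visibly regressing; re-running `evolve` whenever the cursor moves or the energy value changes is one way to make the shape react to both inputs.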

Students

Noemi Mauri: Image generation and keyword extraction, Processing visual effects.

Matteo Gionfriddo: Neural network implementation, mapping of music features to visual effects.

Riccardo Passoni: Visual effects with genetic algorithms, user interaction, bug fixes.

Alessandro Ferrando: Original idea, neural network fixes, fonts.