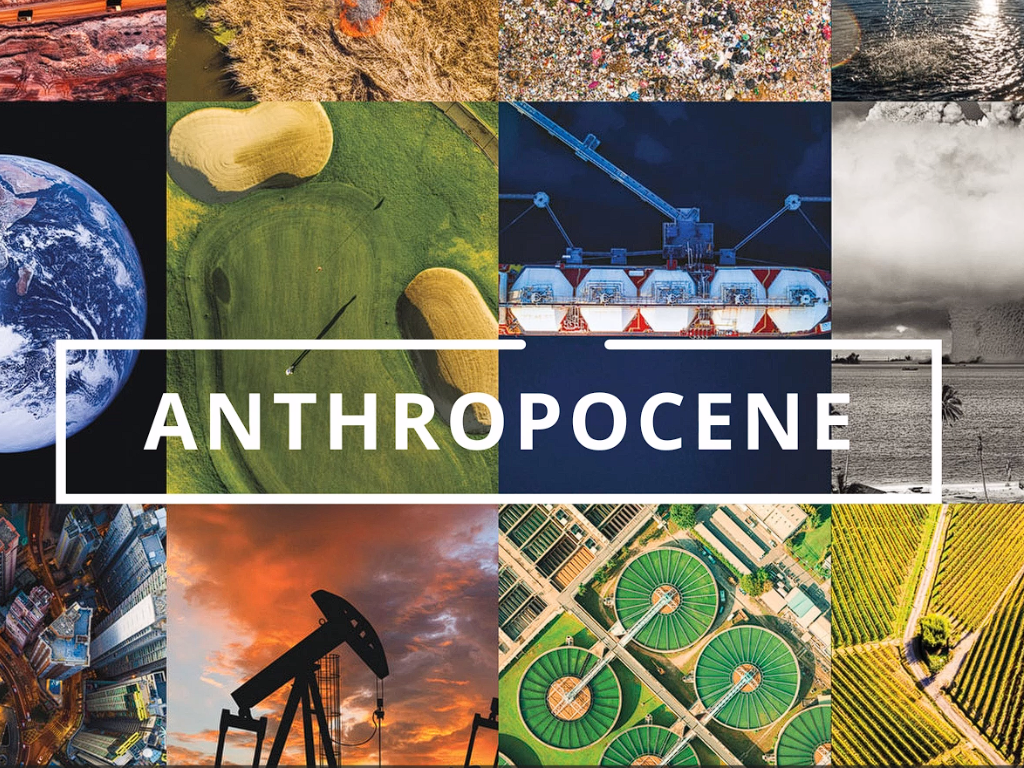

Anthropocene

An immersive audiovisual installation that simulates humanity's transformative impact on, and progressive erosion of, the natural world.

- Academic Year: 2025-26

- Students: Filippo Paris, Matteo Di Giovanni, Alessandro Mancuso, Emanuele Turbanti

- Source code: GitHub

Description

Anthropocene bridges scientific observation and cultural philosophy: it is an interactive audiovisual environment that reconstructs how human presence reshapes the Earth's biosphere. Conceived as an artistic installation whose generative environment is driven by real-time sensors and AI, the project confronts visitors directly with the way human activity alters the natural world. The experience begins in a state of pure nature, immersing participants in pristine visuals and sounds. As camera-based sensors track their movement, the system responds with a progressive metamorphosis: the landscape decays into urban forms and industrial noise, mirroring the audience's own reshaping influence. This evolution is designed to foster emotional resonance and an intuitive sense of responsibility, inviting viewers to move beyond judgment and reflect deeply on their relationship with the world.

Our biggest challenge was the high computational cost of StreamDiffusion models, which forced us to rely on remote servers. Keeping transitions between scenes smooth and seamless was another critical technical challenge, since any visible latency would break the illusion and interrupt the immersive flow.

We are proud of achieving full portability: the multisensory experience adapts to any environment and requires only a projector and a camera. We also integrated a complex, diverse stack of libraries and programs into a single, responsive system.

During the project we learned to use StreamDiffusion and TouchDesigner as environments for dynamic generative art. We also learned how to construct an artistic experience, going beyond code to apply design principles that shape the overall user journey, so that the project works not just as a piece of technology but as a meaningful medium for reflection and awareness.
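The description does not spell out how movement becomes transformation. One possible approach is sketched below, assuming person bounding-box centers are already available for each frame (for example from an Ultralytics YOLO detector). The shift of the detections' centroid becomes a motion amount, which pushes a transformation level from 0 (nature) toward 1 (urban), and that level crossfades two prompts. The prompts, `gain`, `recovery` and `scale` are all hypothetical values, not the project's actual parameters.

```python
import math
from dataclasses import dataclass
from typing import List, Optional, Tuple

Point = Tuple[float, float]  # box center in normalized image coordinates (0..1)

# Hypothetical prompts; the real prompt set was engineered by the team.
NATURE_PROMPT = "pristine forest, clear river, morning light"
URBAN_PROMPT = "dense concrete city, smokestacks, smog"


def centroid(points: List[Point]) -> Optional[Point]:
    """Average position of all detected people, or None if nobody is detected."""
    if not points:
        return None
    xs, ys = zip(*points)
    return (sum(xs) / len(xs), sum(ys) / len(ys))


def motion_amount(prev: List[Point], curr: List[Point], scale: float = 0.1) -> float:
    """Map the centroid shift between two frames to [0, 1].

    `scale` (assumed) is the shift, in normalized units, treated as full motion.
    """
    a, b = centroid(prev), centroid(curr)
    if a is None or b is None:
        return 0.0
    return min(math.dist(a, b) / scale, 1.0)


@dataclass
class TransformationState:
    """0.0 = pristine nature, 1.0 = fully urban/industrial landscape."""
    level: float = 0.0
    gain: float = 0.05     # assumed: push toward urban per frame of full motion
    recovery: float = 0.0  # assumed: drift back toward nature per still frame

    def update(self, motion: float) -> float:
        """Advance one frame with a motion amount in [0, 1]; return the new level."""
        motion = min(max(motion, 0.0), 1.0)
        self.level += self.gain * motion - self.recovery * (1.0 - motion)
        self.level = min(max(self.level, 0.0), 1.0)
        return self.level


def prompt_blend(level: float) -> List[Tuple[str, float]]:
    """Linear crossfade between the two prompts; the weights always sum to 1."""
    return [(NATURE_PROMPT, 1.0 - level), (URBAN_PROMPT, level)]
```

With `recovery=0.0` the decay is strictly one-way, which matches the progressive metamorphosis described above. A positive value would let the landscape slowly heal while visitors stand still.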

Technology

TouchDesigner, SuperCollider, REAPER, OSC, Python, StreamDiffusion, Daydream API, Ultralytics YOLO, DMX, Art-Net, image datasets, QLC+, Syphon (macOS)

Students

Filippo Paris: conceived and pitched the project vision, designed the audio layers in SuperCollider, and implemented the Python scripting and OSC mapping.

Matteo Di Giovanni: camera sensing, background removal for avatar configuration, image dataset curation, prototyping of the sound-layering display, prompt engineering.

Alessandro Mancuso: camera sensing and object detection, Python scripting and OSC mapping, DMX design and connection, user experience design, and development of the TouchDesigner logic (prompt mixing, source image retrieval, lighting behavior).

Emanuele Turbanti: TouchDesigner, Daydream API, StreamDiffusion logic, DMX implementation and Art-Net connection, UX design, Syphon connection.
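The credits mention DMX lighting driven over Art-Net, and QLC+ is listed in the stack, but the exact signal path is not described. The Python sketch below only illustrates what travels over the wire. It builds an ArtDmx packet following the public Art-Net specification: an 18-byte header with the `Art-Net` ID, OpCode 0x5000 little-endian, protocol version 14, a 15-bit Port-Address and an even big-endian data length, followed by the DMX slots and sent to UDP port 6454. The fixture layout (one RGB fixture on channels 1-3) and the green-to-orange color mapping are hypothetical.

```python
import socket
import struct

ARTNET_PORT = 6454  # standard Art-Net UDP port


def artdmx_packet(universe: int, channels: bytes, sequence: int = 0) -> bytes:
    """Build an ArtDmx packet for a 15-bit Port-Address and up to 512 DMX slots."""
    if not 0 <= universe < 2**15:
        raise ValueError("universe must be a 15-bit Port-Address")
    if not 1 <= len(channels) <= 512:
        raise ValueError("DMX payload must be 1-512 bytes")
    if len(channels) % 2:  # the spec requires an even data length
        channels += b"\x00"
    return (
        b"Art-Net\x00"
        + struct.pack("<H", 0x5000)         # OpCode OpDmx, little-endian
        + struct.pack(">H", 14)             # protocol version 14, big-endian
        + bytes([sequence & 0xFF, 0])       # Sequence, Physical
        + struct.pack("<H", universe)       # SubUni (low byte), then Net (high byte)
        + struct.pack(">H", len(channels))  # data length, big-endian
        + channels
    )


def level_to_rgb(level: float) -> bytes:
    """Hypothetical mapping: fade one RGB fixture from green (nature) to orange (industry)."""
    level = min(max(level, 0.0), 1.0)
    nature, urban = (40, 200, 60), (255, 140, 20)
    return bytes(round(n + (u - n) * level) for n, u in zip(nature, urban))


def send_dmx(packet: bytes, host: str = "127.0.0.1") -> None:
    """Send one packet to an Art-Net node or a receiver such as QLC+ (host is assumed)."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(packet, (host, ARTNET_PORT))
```

Calling `send_dmx(artdmx_packet(0, level_to_rgb(level)))` once per frame would keep the lighting in step with the same transformation level that drives the visuals.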